By: Andrew McNeill, Annina Huhtala, Viviana Caro, Kate Schwarz, and Henry Lee

“Your memory is a monster; you forget – it doesn’t. It simply files things away”, wrote John Irving in his enticing novel A Prayer for Owen Meany. But how do memories form in the first place? And could the storyteller be right: does our brain store more than we can actively evoke?

According to current understanding in neuroscience, we track our environment on a timescale of milliseconds, whether it be what we see, touch, taste, smell, or hear. This process is fully automatic and mostly unconscious, and we can’t switch it off, as it has evolved to keep us safe. When we take a walk in a park, our brains keep calculating probabilities about what will happen next and compare the present moment to past experiences. When we hear footsteps from behind, we assume they belong to a runner, not to a hungry beast, and we keep calm. It seems that we do not actively remember this sensory data, yet it is stored in our long-term memory.

Neuroscientist Roberta Bianco and her collaborators are shedding light on these mechanisms with the help of rapid sound patterns. In a recent study, she played participants sound sequences made up of tones only milliseconds long, to see whether people implicitly form long-term memories just by being exposed to such arbitrary auditory stimuli. The results? People detected these very rapid patterns embedded in novel sound excerpts even seven weeks after first hearing them. Bianco’s research supports the idea that humans can implicitly store in memory a meticulous representation of their sound environment.

Auditory memory is often documented and intuitively noted in the context of music – e.g. how quickly we remember musical tunes despite hearing them only a few times. Most forms of music have sequences and patterns that contain groups of notes and rhythms. Whenever we hear music, our auditory system does a great job at recognising these patterns and spotting any unexpected notes or chords. This effortless processing is called statistical learning, and it affects how we listen to and memorise music. We implicitly learn ‘normal’ sequences and patterns in music and build predictive models to estimate what will happen next and to notice irregularities.

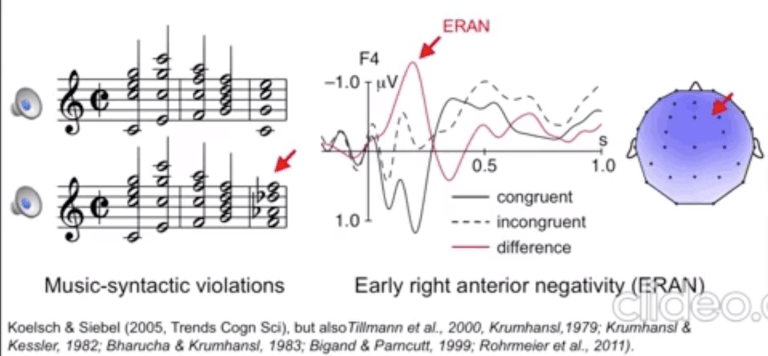

In a study by Koelsch and colleagues, a group of non-musicians passively listened to two chord progressions while the electrical activity in their brains was recorded using electroencephalography (EEG). The chord progressions ended in either an expected or an unexpected chord (e.g., a D flat major chord within a C major chord progression is unexpected). When a chord violates Western music theory rules, the brain notices. In the experiment, participants’ brains showed a strong negative response to the incongruent endings, because the incongruent chord violated the progression they expected.

In a recent study, Bianco and colleagues had expert pianists play novel chord sequences without hearing the sound. The results showed that the incongruent endings activated temporal-frontal networks. These brain areas are associated with prediction violations during music listening, but light up even during silent performance – demonstrating that the brain uses predictive models of acquired patterns or structures not only when we sense the world but also when we act on it.

To build strong predictive models, our brain needs long-term exposure to melodic patterns. Our brain may encode and group the information into tiny ‘storage units’, or n-grams. The more we are exposed to a specific pattern, the stronger and more salient its n-grams become, resulting in faster retrieval of information. Most memories decay as time passes, but Bianco’s work indicates that when memory traces are reinforced, they last longer despite this decay.
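The n-gram idea can be made concrete with a toy sketch. The code below is only an illustration, not the model used in Bianco's study: it counts how often each short run of notes occurs in a made-up melody and uses those counts to guess the next note. The function names `count_ngrams` and `predict_next`, and the melody itself, are assumptions invented for this example.

```python
from collections import Counter

def count_ngrams(sequence, n=2):
    """Count every run of n consecutive items in the sequence."""
    return Counter(
        tuple(sequence[i:i + n]) for i in range(len(sequence) - n + 1)
    )

def predict_next(counts, context):
    """Return the continuation seen most often after `context`, or None.

    `counts` must hold n-grams exactly one item longer than `context`.
    """
    context = tuple(context)
    k = len(context)
    candidates = {
        gram[-1]: seen
        for gram, seen in counts.items()
        if len(gram) == k + 1 and gram[:k] == context
    }
    if not candidates:
        return None  # this context has never been heard
    return max(candidates, key=candidates.get)

# Hypothetical melody: the run C-D-E is heard twice, C-D-G only once.
melody = ["C", "D", "E", "C", "D", "E", "C", "D", "G"]
bigrams = count_ngrams(melody, n=2)
trigrams = count_ngrams(melody, n=3)

# The more often a pattern is heard, the higher its count, and the more
# strongly it shapes the prediction.
guess_after_d = predict_next(bigrams, ["D"])
guess_after_cd = predict_next(trigrams, ["C", "D"])
```

In this sketch, repeated exposure simply raises a count, which is a crude stand-in for a memory trace becoming stronger; an ending such as G after C-D is 'unexpected' only because it has been heard less often than E.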

How precise are these predictive models then? To understand how reliable our memory really is, Bianco and colleagues exposed participants briefly to tone patterns and then tested how well they could recall them. Participants could recognise some patterns, but in most cases the results were relatively poor. Bianco then tested reaction times to reoccurring patterns. Results showed that reoccurring patterns were detected much faster than novel ones. For Bianco, this lack of correlation between familiarity and reaction time indicates a dissociation between what we remember implicitly and the degree to which we can explicitly recall it. It seems that our cautious brain preserves as much information as possible, even if that information is not relevant to the task at hand, and stows it away in long-term memory. It does this to protect the capacity of our short-term memory. If short-term memory gets saturated, we are not able to adapt and control our behaviour in the present moment; storing information in long-term memory is therefore a way to economise cognitive resources.

For how long does our long-term memory store information? Bianco and her group set up an experiment in which listeners heard both novel patterns and patterns that had embedded in them tone sequences they had heard seven weeks before. Incredibly, the participants recognised the previously heard patterns, which were 1 second long and composed of 20 tones of 50 ms each, even after seven weeks!
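To give a feel for how short these stimuli are, here is a minimal sketch that builds one such pattern and checks its length. Only the two figures from the text (20 tones, 50 ms each) come from the article; the frequency pool, the random seed and the function names are assumptions made up for illustration, and this is not the stimulus code used in the study.

```python
import random

TONE_MS = 50             # tone duration given in the article
TONES_PER_PATTERN = 20   # tones per pattern given in the article

# Hypothetical pool of tone frequencies in Hz: two octaves of semitones
# starting at 220 Hz. The real stimuli may have used a different set.
FREQ_POOL = [220 * 2 ** (k / 12) for k in range(24)]

def make_pattern(rng):
    """Draw a random sequence of tone frequencies for one pattern."""
    return [rng.choice(FREQ_POOL) for _ in range(TONES_PER_PATTERN)]

def pattern_duration_ms(pattern):
    """Total duration of a pattern, one fixed-length tone after another."""
    return len(pattern) * TONE_MS

rng = random.Random(0)   # fixed seed so the sketch is repeatable
pattern = make_pattern(rng)
duration = pattern_duration_ms(pattern)  # 20 tones x 50 ms = 1000 ms
```

The arithmetic is the point here: twenty tones of 50 ms add up to exactly one second, so each pattern is over almost as soon as it has begun.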

Bianco’s research in music helps us to understand how memory works, and its implications are far-reaching. Understanding how our brain preserves sequential information, and how that information deteriorates, could be essential not only for extending our grasp of memory and its role in perception, but also for shedding light on the perceptual consequences when these systems fail, improving the diagnosis and understanding of various cognitive impairments.

Our brain is very much like a computer; it can create and update complicated models. In addition, it can pick up data implicitly, without our deliberate effort, and store it in our passive memory bank just in case we need it again. Our brain is also a bit like a jukebox that collects and archives all the songs and sounds from its environment. There is no disputing the fact, however, that this computer and jukebox give us the tools to make sense of our environment and make decisions about the world around us. There is some truth, after all, to the idea that our memory is a monster, but there’s still a lot we don’t understand about this particular monster. Perhaps we should because, ultimately, it’s our beautiful monster that we’re taking care of.

References:

Bianco, R., Harrison, P. M. C., Hu, M., Bolger, C., Picken, S., Pearce, M. T., & Chait, M. (2020). Long-term implicit memory for sequential auditory patterns in humans. BioRxiv. https://doi.org/10.1101/2020.02.14.949404

Cowan, N. (2008). What are the differences between long-term, short-term, and working memory? Progress in Brain Research, 169, 323–338.

Conway, C. M., & Pisoni, D. B. (2008). Neurocognitive basis of implicit learning of sequential structure and its relation to language processing. Annals of the New York Academy of Sciences, 1145, 113–131. https://doi.org/10.1196/annals.1416.009

Koelsch, S., Vuust, P., & Friston, K. (2019). Predictive Processes and the Peculiar Case of Music. Trends in Cognitive Sciences, 23(1), 63–77. https://doi.org/10.1016/j.tics.2018.10.006

Pearce, M. T. (2018). Statistical learning and probabilistic prediction in music cognition: Mechanisms of stylistic enculturation. Annals of the New York Academy of Sciences, 1423(1), 378–395. https://doi.org/10.1111/nyas.13654

Tillmann, B., Bigand, E., & Bharucha, J. J. (2000). Implicit Learning of Tonality: A Self-Organizing Approach. Psychological Review, 107(4), 885–913. https://doi.org/10.1037//0033-295X.107.4.885
